http://fortune.com/2014/12/08/oil-prices-drop-impact/

Oil price drops: Don't panic, really

COMMENTARY by Cyrus Sanati @beyondblunt DECEMBER 8, 2014, 12:32 PM EST

Oil prices have a lot more room to fall before things get really scary. Here’s why.

The recent drop in crude prices won’t kill off the U.S. shale oil industry. It’ll just make it more efficient.

Profit margins and break-even points are relative not only to the price of oil, but also to the cost of doing business. As oil prices drop, producers will undoubtedly renegotiate their ludicrously expensive oil service contracts, slash wages for their workforce and cut perks to bring their costs in line with the depressed price for crude. The demand for oil remains strong, which should provide an adequate floor for producers in the long run, but only after they get their finances in order.

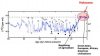

How oil prices ever reached $100 a barrel remains a mystery to many who have followed the industry for years. But the 40% drop in oil prices over the past six months has shocked oil bears and bulls alike. Why on earth did it fall so hard, so fast? There is plenty of speculation, ranging from the Saudis’ wish to “crush” the U.S. shale industry to the U.S. colluding with the Saudis to flood the market in order to bankrupt an aggressive Russia and an obstinate Iran.

Conspiracy theories aside, the fact is oil prices have dropped and they may stay “low” for a while. This has analysts, journalists, and pundits running around claiming that it’s the end of the world.

It is understandable that people are nervous. After all, the oil industry is a major producer of jobs and wealth for the U.S. It contributes around $1.2 trillion to U.S. GDP and supports over 9.3 million permanent jobs, according to a study from The Perryman Group. Not all of that money and those jobs come directly from the shale oil industry, or even from the energy industry as a whole; much of it derives from the multiplier effect the industry has on local economies. Given this, it’s clear why any drop in the oil price, let alone a 40% drop, is cause for concern.

Nowhere in the U.S. is that concern felt more acutely than in Houston, Texas, the nation’s oil capital. The falling price of crude hasn’t had a major impact on the city’s economy, at least not yet. But people, especially the under-40 crowd (the Shale Boomers, as I call them), are starting to grow very worried. At bars and restaurants in Houston’s newly gentrified East End and Midtown districts, you often hear the young bucks (and does) comparing notes on their companies’ break-even oil prices. Those who work for producers with large acreage in the Bakken shale in North Dakota say West Texas Intermediate (WTI) crude needs to stay above $60 a barrel for their companies to stay in the black. Those who work for producers with large acreage in the Eagle Ford shale play in south Texas say their companies can stay afloat with oil as low as $45 to $50 a barrel.

Both groups say they have heard that their companies are starting to walk away from some of the more “speculative” parts of their fields, which translates into lower production, the first such decrease in years. This was confirmed Wednesday when the Fed’s Beige Book noted that oil and gas activity in North Dakota decreased in early November due to the rapid fall in oil prices. Nevertheless, the Fed added that the outlook of “officials” in North Dakota “remained optimistic,” and that they expect oil production to continue to increase over the next two years.

What are these “officials” thinking? Don’t they worry about the break-even price of oil? Sure they do, but unlike the Shale Boomers, they probably also remember drilling for oil when it traded in the single digits, which really wasn’t that long ago. For these seasoned oil men, crude at $60 a barrel still looks mighty appealing.

Doug Sheridan, the founder of EnergyPoint Research, which conducts satisfaction surveys, ratings, and reports for the energy industry, recalls a lunch 10 years ago with an executive at an energy giant who confided that his firm was worried oil prices had risen too high, too fast. “He was concerned that the high prices would attract negative attention from the press and Congress,” Sheridan told Fortune. “The funny thing was, oil prices were only around $33 a barrel.”

The shale boom has perpetuated the notion that drilling for oil, especially in shale formations, is somehow super complicated and expensive. It really isn’t. Fracking a well involves just shooting a bunch of water and chemicals down a hole at high pressure, which is not exactly rocket science. The technique has been around since the 1940s, and the energy industry has gotten very good at it over the decades. Recent advances in technology, such as horizontal drilling, have made fracking wells even easier and more efficient.

But even though drilling for oil has become easier and more efficient, production costs have gone through the roof. Why? There are a few reasons, but the main one is the high price of oil. When oil service firms like Halliburton and Schlumberger negotiate contracts with producers, they usually take the oil price into consideration: the higher the oil price, the higher the cost of their services. This, combined with the boom in cheap credit over the last few years, has increased demand for everything related to the oil service sector, from men to material to housing. In what other industry can someone without a college degree start out making six figures for manual labor? In oil and gas you can, especially in places like Western North Dakota, where McDonald’s employees make $20 an hour and rent for a modest place can top $2,000 a month.

But as the oil price drops, so will costs, bringing the “break-even” price down with them. Seasoned oil men know how to get this done. It involves a little Texas theater, which is sort of like bargaining at a Turkish bazaar. The producers will first clutch their hearts and tell their suppliers that they simply cannot afford to drill anymore given the sharp slump in oil prices. Their suppliers will offer a slight discount on their services, but the producer will say he’s “walking away.” This is where we are in the negotiating cycle.

After letting the oil service firms sweat a bit (traditionally around two to four months), a producer will give its former suppliers a call, saying it is “thinking” of getting back in the game. Desperate for work, the suppliers will now be willing to renegotiate a whole new agreement based on a lower oil price. The aim of the new contract is to give producers close to the same margin they had when prices were much higher. Profits are restored and everyone is happy.
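How far do costs have to fall for margins to be restored? The article doesn’t say whether “the same margin” means the same dollars per barrel or the same share of the selling price. Here is a minimal sketch, assuming a percentage margin and purely hypothetical per-barrel figures (a $100 old price and a $70 old all-in cost, neither taken from the article), of the cost a renegotiated contract would need to hit at $63 oil:

```python
def margin_pct(price: float, cost: float) -> float:
    """Profit per barrel as a share of the selling price."""
    return (price - cost) / price


def cost_for_same_margin(old_price: float, old_cost: float, new_price: float) -> float:
    """Per-barrel cost that keeps the percentage margin unchanged at a new price.

    Assumes "same margin" means the same percentage of price (an assumption,
    not something the article specifies).
    """
    return new_price * (old_cost / old_price)


# Hypothetical numbers for illustration only; not from the article.
old_price, old_cost = 100.0, 70.0
new_price = 63.0

target_cost = cost_for_same_margin(old_price, old_cost, new_price)
print(f"Cost must fall from ${old_cost:.2f} to ${target_cost:.2f} per barrel")
print(f"Margin before: {margin_pct(old_price, old_cost):.0%}, "
      f"after: {margin_pct(new_price, target_cost):.0%}")
```

Under these assumptions the hypothetical cost drops to about $44.10 a barrel, and the margin stays at 30%. If “the same margin” instead means the same dollars per barrel, the hypothetical cost would have to fall further, to $33 ($63 minus the original $30).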

This negotiation will happen across all parts of the oil and gas cost structure. So welders who were making $135,000 a year will probably see a pay cut, while the administrative staff back at headquarters will probably miss out on that fat bonus check they have come to rely on. Rig workers and engineers will see their pay and benefits slashed as well. Anyone who complains will be sent to Alaska or somewhere even worse than Western North Dakota in the winter, like Siberia (seriously). And as with any bursting bubble, asset prices will start to fall for everything from oil leases to jack-up rigs to townhouses in Houston. Oh, and that McDonald’s employee in Western North Dakota will probably need to settle for $15 an hour.

But oil production will continue, that is, until prices reach a point at which it truly makes no sense for anyone to drill anywhere.

So, what is the absolute lowest price at which oil can be produced in the U.S.? Consider this: fracking last boomed in the U.S. back in the mid-1980s, when a barrel of oil fetched around $23. That is equivalent to around $50 a barrel today, adjusted for inflation. That fracking boom went bust after prices fell to around $8 a barrel, which is worth around $18 in today’s money. With oil hitting $63 a barrel last week, prices seem to have a lot more room to fall before things get really scary.
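Inflation adjustments like these usually scale a nominal price by the ratio of a price index today to the same index back then. Here is a minimal sketch of that calculation, assuming approximate U.S. CPI-U annual averages of 107.6 for 1985 and 236.7 for 2014. These index values are not from the article; check them against Bureau of Labor Statistics data before relying on them.

```python
# Assumed, approximate CPI-U annual averages (not from the article; verify with BLS).
CPI_1985 = 107.6
CPI_2014 = 236.7


def to_2014_dollars(nominal_price: float,
                    cpi_then: float = CPI_1985,
                    cpi_now: float = CPI_2014) -> float:
    """Restate a historical nominal price in 2014 dollars via the CPI ratio."""
    return nominal_price * cpi_now / cpi_then


boom_real = to_2014_dollars(23.0)  # mid-1980s boom price of about $23/bbl
bust_real = to_2014_dollars(8.0)   # bust-era low of about $8/bbl
current = 63.0                     # oil price cited for the prior week

print(f"Boom price in 2014 dollars: ${boom_real:.0f}")
print(f"Bust price in 2014 dollars: ${bust_real:.0f}")
print(f"Room above the old bust level: ${current - bust_real:.0f} per barrel")
```

With those assumed index values, the $23 boom price comes out near $51 and the $8 bust price near $18. Both figures are in line with the article’s rounded numbers.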