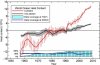

Dr Mears’ results are summarized in Fig. 4:


Figure 4. Output of 33 IPCC models (turquoise) compared with measured RSS global temperature change (black), 1979-2014. The transient coolings caused by the volcanic eruptions of El Chichón (1982) and Pinatubo (1991) are shown, as is the spike in warming caused by the great el Niño of 1998.

Dr Mears writes:

“The denialists like to assume that the cause for the model/observation discrepancy is some kind of problem with the fundamental model physics, and they pooh-pooh any other sort of explanation. This leads them to conclude, very likely erroneously, that the long-term sensitivity of the climate is much less than is currently thought.”

Dr Mears concedes the growing discrepancy between the RSS data and the models, but he alleges “cherry-picking” of the start-date for the global-temperature graph:

“Recently, a number of articles in the mainstream press have pointed out that there appears to have been little or no change in globally averaged temperature over the last two decades. Because of this, we are getting a lot of questions along the lines of ‘I saw this plot on a denialist web site. Is this really your data?’ While some of these reports have ‘cherry-picked’ their end points to make their evidence seem even stronger, there is not much doubt that the rate of warming since the late 1990s is less than that predicted by most of the IPCC AR5 simulations of historical climate. … The denialists really like to fit trends starting in 1997, so that the huge 1997-98 ENSO event is at the start of their time series, resulting in a linear fit with the smallest possible slope.”

In fact, the spike in temperatures caused by the Great el Niño of 1998 is largely offset in the linear-trend calculation by two factors: the not dissimilar spike of the 2010 el Niño, and the sheer length of the Great Pause itself.

Curiously, Dr Mears prefers the much-altered terrestrial datasets to the satellite datasets. However, over the entire length of the RSS and UAH series since 1979, the trends on the mean of the terrestrial datasets and on the mean of the satellite datasets are near-identical. Indeed, the UK Met Office uses the satellite record to calibrate its own terrestrial record.

The length of the Great Pause in global warming, significant though it now is, is of less importance than the ever-growing discrepancy between the temperature trends predicted by models and the far less exciting real-world temperature change that has been observed. It remains possible that el Niño-like conditions may prevail this year, reducing the length of the Great Pause. However, the discrepancy between prediction and observation continues to widen.

IPCC’s First Assessment Report predicted that global temperature would rise by 1.0 [0.7, 1.5] C° to 2025, equivalent to 2.8 [1.9, 4.2] C° per century. The executive summary asked, “How much confidence do we have in our predictions?” IPCC pointed out some uncertainties (clouds, oceans, etc.), but concluded:

“Nevertheless, … we have substantial confidence that models can predict at least the broad-scale features of climate change. … There are similarities between results from the coupled models using simple representations of the ocean and those using more sophisticated descriptions, and our understanding of such differences as do occur gives us some confidence in the results.”

That “substantial confidence” was substantial over-confidence. For the rate of global warming since 1990 – the most important of the “broad-scale features of climate change” that the models were supposed to predict – is now below half what the IPCC had then predicted.
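The per-century figures quoted from the First Assessment Report can be checked with a few lines of arithmetic. The sketch below is illustrative only: the helper name `per_century` is hypothetical, and the 36-year span (1990 to 2025, counted inclusively) is an assumption that is not stated in the text, chosen because it reproduces all three quoted rates.

```python
# Minimal sketch: convert a projected temperature rise over a span of years
# into an equivalent per-century rate.

def per_century(rise_c, years):
    """Return the rate in C degrees per century for a rise over `years`."""
    return rise_c / years * 100.0

# ASSUMPTION: the span is not given in the article; 36 years is inferred
# because it reproduces the quoted 2.8 [1.9, 4.2] figures.
SPAN_YEARS = 36

# Projected rise to 2025 as quoted: central estimate with lower and upper bounds.
far_rise = {"central": 1.0, "low": 0.7, "high": 1.5}

far_rate = {name: round(per_century(rise, SPAN_YEARS), 1)
            for name, rise in far_rise.items()}

# Half of the central rate: the threshold implied by "below half".
half_central = per_century(far_rise["central"], SPAN_YEARS) / 2.0

print(far_rate)
print(f"half of central rate: {half_central:.2f} C degrees per century")
```

On that assumption, an observed trend of less than about 1.4 C° per century would sit below half of the central prediction.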

Is the ocean warming?

One frequently discussed explanation for the Great Pause is that the coupled ocean-atmosphere system has continued to accumulate heat at approximately the rate predicted by the models, but that in recent decades the ocean has removed that heat from the atmosphere. Since globally the near-surface strata show far less warming than the models had predicted, it is hypothesized that the so-called “missing heat” has traveled to the little-measured abyssal strata below 2000 m, whence it may emerge at some future date.

Yet to date no empirical, theoretical or numerical method, complex or simple, has successfully specified mechanistically either how the heat generated by anthropogenic greenhouse-gas enrichment of the atmosphere has reached the deep ocean without much altering the heat content of the intervening near-surface strata or how the heat from the bottom of the ocean may eventually re-emerge to perturb the near-surface climate conditions that are relevant to land-based life on Earth.

Most ocean models used in performing coupled general-circulation model sensitivity runs simply cannot resolve most of the physical processes relevant for capturing heat uptake by the deep ocean. Ultimately, the second law of thermodynamics requires that any heat which may have accumulated in the deep ocean will dissipate via various diffusive processes. It is not plausible that any heat taken up by the deep ocean will suddenly warm the upper ocean and, via the upper ocean, the atmosphere.

If the “deep heat” explanation for the hiatus in global warming is correct (and it is merely one among dozens that have been offered), then the complex models have failed to account for it correctly: otherwise, the growing discrepancy between the predicted and observed atmospheric warming rates would not have become as significant as it has.

Besides, the 3500 automated Argo bathythermograph buoys have a resolution equivalent to taking a single temperature and salinity profile in Lake Superior less than once a year: and before Argo came onstream in the middle of the last decade the resolution of oceanic temperature measurements was considerably poorer even than that, especially in the abyssal strata.

Finally, though the Argo buoys measure ocean temperature change directly, before publication NOAA craftily convert the temperature change into zettajoules of ocean heat content change, which make the change seem larger. Converting the ocean heat content change back to temperature change reveals just how little ocean warming is occurring.

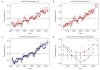

Is some underlying rate of global warming captured by the ocean temperature measurements? Well, the terrifying-sounding heat content change of 260 ZJ from 1970 to 2014 is equivalent to just 0.2 K/century of global warming.


Figure 5. Ocean heat content change, 1957-2013, in zettajoules, from NODC Ocean Climate Lab: http://www.nodc.noaa.gov/OC5/3M_HEAT_CONTENT. The heat content has been converted back to the ocean temperature changes in fractions of a Kelvin that were originally measured. NOAA’s conversion of the minuscule temperature change data to zettajoules, combined with the exaggerated vertical aspect of the graph, has the effect of making a very small change in ocean temperature seem considerably more significant than it is.
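The conversion from heat content back to temperature can be sketched in a few lines. This is a rough illustration and not NOAA's method: the article does not say which ocean layer the 260 ZJ refers to, so the sketch assumes the 0-2000 m layer, and the volume, density and specific-heat values are assumed round figures. The function name `heat_to_warming` is hypothetical.

```python
# Minimal sketch: convert an ocean heat content change (in zettajoules)
# into a mean temperature change and an equivalent per-century rate.

# ASSUMPTIONS (round values, not taken from the article):
OCEAN_VOLUME_0_2000M_M3 = 6.5e17        # assumed volume of the 0-2000 m layer
SEAWATER_DENSITY_KG_M3 = 1025.0         # assumed mean density of seawater
SEAWATER_SPECIFIC_HEAT_J_KG_K = 3990.0  # assumed specific heat of seawater
JOULES_PER_ZJ = 1e21

def heat_to_warming(delta_heat_zj, years):
    """Return (temperature change in K, rate in K per century)."""
    mass_kg = OCEAN_VOLUME_0_2000M_M3 * SEAWATER_DENSITY_KG_M3
    # delta_T = Q / (m * c_p)
    delta_t_k = (delta_heat_zj * JOULES_PER_ZJ
                 / (mass_kg * SEAWATER_SPECIFIC_HEAT_J_KG_K))
    rate_k_per_century = delta_t_k / years * 100.0
    return delta_t_k, rate_k_per_century

# Figures quoted in the text: 260 ZJ from 1970 to 2014.
delta_t_k, rate = heat_to_warming(260.0, 2014 - 1970)
print(f"{delta_t_k:.2f} K over the period, {rate:.2f} K/century")
```

With these assumed constants the result is roughly 0.1 K over the period, or about 0.2 K/century, in line with the figure quoted above. A different choice of layer depth or volume would change the answer in proportion.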